Cognitive Biases

Nonlinear perception

Perception of gain and loss

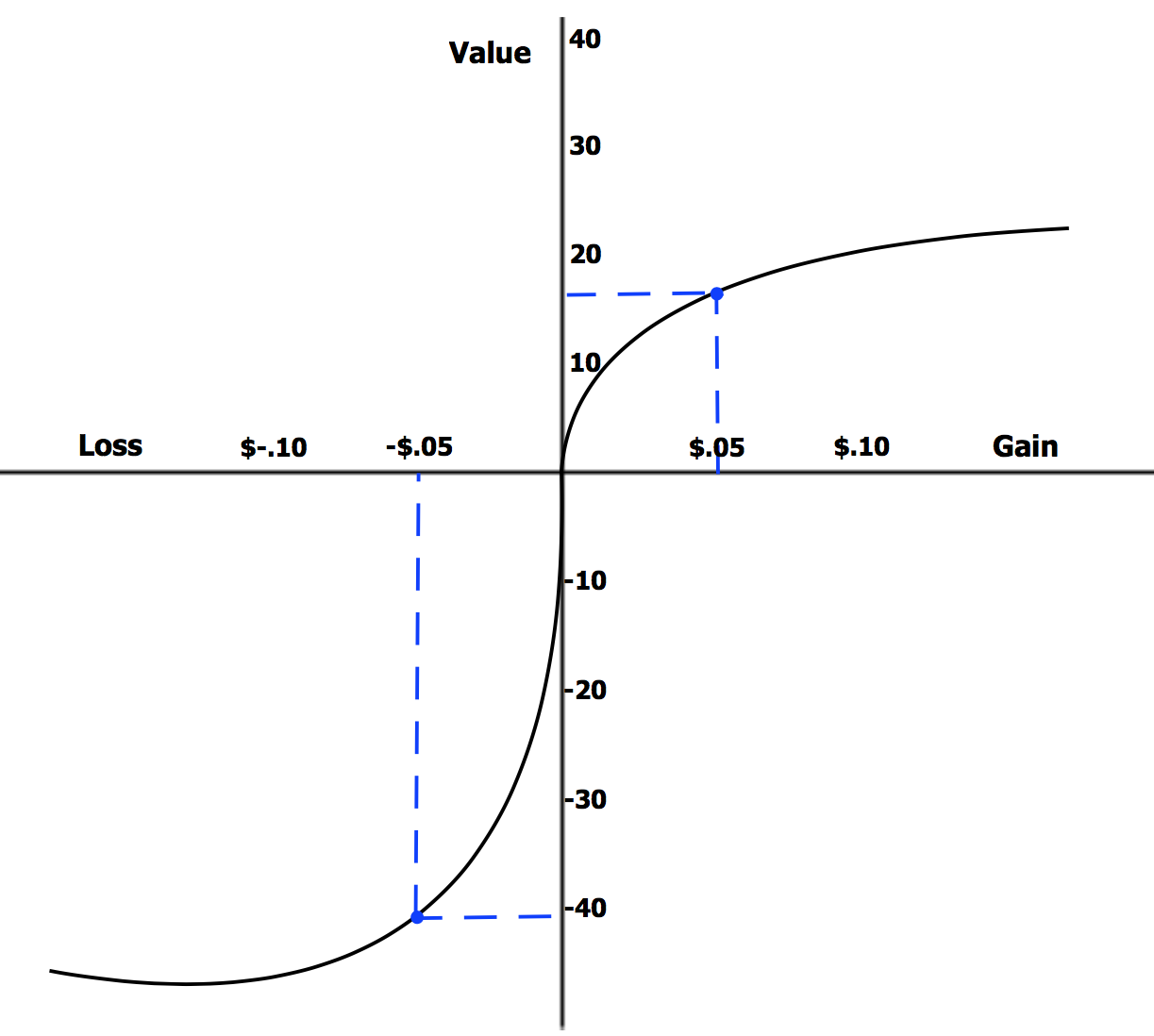

Diminishing marginal utility: The more of something you have, the less utility another unit of it has. For example, if a hungry person eats three pieces of bread, the first piece, eaten while hungry, has more utility than the second piece eaten after it, and so on.

Corresponding to diminishing marginal utility, the happiness of gaining $200 is less than twice the happiness of gaining $100. The perception of gain is concave.

The same applies to pain. The pain of losing $100 twice is greater than the pain of losing $200 at once.
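The two comparisons above hold for any perception curve that flattens as the amount grows. Here is a minimal sketch that assumes a square-root curve, mirrored for losses. The curve and the name `perceived_value` are illustrative assumptions, not something measured or taken from the text.

```python
import math


def perceived_value(amount: float) -> float:
    """Illustrative perceived value of a gain (+) or loss (-).

    Assumption: a square-root curve, mirrored around zero, so that each
    extra dollar matters less than the one before it.
    """
    return math.copysign(math.sqrt(abs(amount)), amount)


one_big_gain = perceived_value(200)
two_small_gains = 2 * perceived_value(100)
one_big_loss = perceived_value(-200)
two_small_losses = 2 * perceived_value(-100)

# One $200 gain feels smaller than two separate $100 gains.
print(f"gain $200 once: {one_big_gain:.2f}   gain $100 twice: {two_small_gains:.2f}")
# One $200 loss hurts less than two separate $100 losses.
print(f"lose $200 once: {one_big_loss:.2f}   lose $100 twice: {two_small_losses:.2f}")
```

Any other curve with the same flattening shape (for example a logarithm) gives the same ordering, only with different numbers.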

Weber-Fechner law: Human sensory perception is roughly logarithmic in the actual stimulus intensity.

Expectation and framing

The "gain/loss" is relative to the expectation (frame of reference). Different people have different expectations in different scenarios.

Expectation management is important. If the outcome is good but doesn't meet a high expectation, it still causes disappointment. Conversely, a mediocre outcome that beats a low expectation still brings satisfaction.

Expectations can change gradually. People get used to the new norm. This lets people endure bad environments, but also keeps them from staying satisfied after an achievement.

Shifting baseline syndrome (boiling frog syndrome): If reality keeps changing slowly, the expectation also tends to keep shifting, and eventually moves a lot without being noticed. This is also common in long-term psychological manipulation.

Relative deprivation: When people expect to have something that they don't have, they feel that they have lost it, although they haven't actually lost anything. For example, in a bull market, people near you make a 50% profit but you make only 20%.

Door-in-the-face effect: First make a large request that will likely be rejected, then make a modest request. The initial large request shifts the expectation and makes the subsequent modest request easier to accept.

Protective pessimism: Being pessimistic can reduce the risk of disappointment.

| | Be optimistic | Be pessimistic |
|---|---|---|
| Result is good | Expected. Mild happiness. | Exceeds expectation. Large happiness. 1 |
| Result is bad | Large disappointment. | Expected. Mild disappointment. |

Procrastination is also related to protective pessimism. If you believe that the outcome will be bad, then reducing the cost (the time and effort put into it) is "beneficial".

Loss aversion and risk aversion

In real life, some risks are hard to reverse or are irreversible, so avoiding risk is more important than gaining. In investment, losing 10% requires gaining 11.1% to recover, and losing 50% requires gaining 100% to recover.
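The recovery figures above follow from simple arithmetic: after losing a fraction `L` of the capital, the remaining `1 - L` has to grow by `1 / (1 - L) - 1` to get back to the starting point. A small sketch (the function name `required_recovery_gain` is only illustrative):

```python
def required_recovery_gain(loss_fraction: float) -> float:
    """Gain needed to return to the starting capital after a loss.

    loss_fraction is between 0 (no loss) and 1 (total loss, exclusive).
    """
    if not 0 <= loss_fraction < 1:
        raise ValueError("loss_fraction must be in [0, 1)")
    return 1 / (1 - loss_fraction) - 1


for loss in (0.10, 0.20, 0.50, 0.90):
    gain = required_recovery_gain(loss)
    print(f"lose {loss:.0%} -> need to gain {gain:.1%} to recover")
```

The required gain grows faster and faster as the loss approaches 100%, which is one way to see why large losses are so hard to reverse.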

Staying in the game is important, as it keeps one exposed to future opportunities.

So, losses have a larger mental impact than gains of the same size. The pain of losing $100 is bigger than the happiness of gaining $100.

Unfortunately, loss aversion makes being unhappy easier and being happy harder.

Relative deprivation is also a kind of loss that people tend to avoid. For example, when the people near one get rich by investing in a bubble asset, one may also choose to invest in the bubble asset to avoid the "relative loss" between oneself and others.

It's much easier to raise expectations than to lower them. The knowledge that "better things exist" can be an "info hazard", as it makes it harder to accept the things one is used to.

Loss aversion doesn't contradict the fact that many people don't care about long-term health or cybersecurity, because these potential risks are very abstract and unclear.

"Better safe than sorry" assumption

When seeing unwanted behavior from others, people tend to assume malice, following "better safe than sorry":

- If the unwanted behavior is indeed malicious but one doesn't assume malice, then one is in danger.

- If the unwanted behavior is not malicious but one assumes malice, one may miss an opportunity. But it's safer.

But assuming every cue is malice is bad for mental health. There is a saying:

Never ascribe to malice that which is adequately explained by incompetence. (See also)

In reality, most people focus on their own business. Most people don't remember details about you. Most people don't pay attention to something you said just once.

Wet bias: Overestimating the probability of rain to make the forecast more useful.

Believing a false conspiracy theory can often reduce risk effectively. Conspiracy theories have real utility under the "better safe than sorry" principle. The same applies to cynicism.

Bad news travels fast. Tragedy news can gain more attention than happy news:

- Sharing happy news is often seen as bragging or advertisement, because the happy thing applies to other people. But sharing tragedy news signals care and empathy.

- Tragedy news gives more information about potential risk. When reading about a tragedy, the reader tends to think "why did the tragedy happen? what should I do to avoid it?"

- Negative emotion is more persistent due to loss aversion.

- In a group, sharing bad news caused by the group's common enemy can strengthen social approval within the group.

Tragedy stories often feel more "true" than happy stories.

Browsing social media can make one stuck in negative emotions.

Fiction is different. Popular non-literary fiction often contains a transition from tragedy to a happy ending.

Perception of risk

We prefer a certain gain over a risky gain. A bird in the hand is worth two in the bush.

Given 100% chance to gain $450 or 50% chance to gain $1000, people tend to choose the former.

Professions with uncertain gains, such as academic research, where it's common to work on a problem for years without getting any meaningful result, are not suitable for most people.

We prefer having hope rather than accepting failure.

Given 100% chance to lose $500 or 50% chance to lose $1100, most people will choose the latter. The second one has "hope" and the first one means accepting failure.

In this case, "not losing" is usually taken as the expectation. What if the expectation is "already lost $500"? Then the two choices become: 1. no change; 2. a 50% chance to gain $500 and a 50% chance to lose $600. In this case, people tend to choose the first choice, which has lower risk. The expectation point is very important.
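The gambles above can be written down explicitly. The sketch below computes their expected values and re-expresses the loss gamble relative to a different expectation point. The helper names `expected_value` and `reframe` are illustrative assumptions, and the risky options are assumed to pay nothing (or lose nothing) in the other 50% of cases.

```python
def expected_value(lottery):
    """Expected payoff of a lottery given as (probability, payoff) pairs."""
    return sum(p * payoff for p, payoff in lottery)


def reframe(lottery, reference):
    """Express every payoff relative to a reference point (the expectation)."""
    return [(p, payoff - reference) for p, payoff in lottery]


sure_gain = [(1.0, 450)]
risky_gain = [(0.5, 1000), (0.5, 0)]   # assumed: nothing in the other 50%
sure_loss = [(1.0, -500)]
risky_loss = [(0.5, -1100), (0.5, 0)]  # assumed: no loss in the other 50%

print("gains: ", expected_value(sure_gain), "vs", expected_value(risky_gain))
print("losses:", expected_value(sure_loss), "vs", expected_value(risky_loss))

# Same loss gambles, seen from the expectation "already lost $500".
print("reframed sure loss: ", reframe(sure_loss, -500))
print("reframed risky loss:", reframe(risky_loss, -500))
```

In both framings of the original question, the commonly preferred option (the sure $450, the risky loss) has the lower expected value, so the preference comes from how risk is perceived around the expectation point rather than from the averages.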

Time perception

Telescoping effect:

- In perception, recent time is "stretched". Recent events are recalled as earlier than the actual time of the event. (backward telescoping)

- In perception, distant past time is "compressed". The events in distant past are recalled as more recent than the actual time. (forward telescoping)

Vierordt's law: Shorter time intervals tend to be overestimated. Longer time intervals tend to be underestimated.

Oddball effect: Time spent on novel and unexpected experiences feels longer.

It can be seen that we feel the length of time via the amount of memory. Novel and unexpected experiences correspond to more memory. Forgetting "compresses" time. As people get older, novel experiences become rarer, so time feels faster.

The memory of feeling risk has higher "weight" (risk aversion), so time feels slower when feeling risk. In contrast, happy time feels like it passes faster.

Reference: Time perception - Wikipedia

Hedonic treadmill

Hedonic treadmill: after some time of happiness, the expectation goes up and happiness declines. The things that people have gained gradually get taken for granted, and they always pursue more.

Do not spoil what you have by desiring what you have not; remember that what you now have was once among the things you only hoped for.

― Epicurus

If happiness can be predicted, some of the happiness moves earlier. For example, one is originally happy when eating delicious chocolate. Then one becomes happy right after buying the chocolate, before eating it, and the happiness of actually eating it declines. Later the happiness can move even earlier, to the decision to buy chocolate. This effect is also called second-order conditioning.

Material consumption can give short-term satisfaction, but cannot give long-term well-being (paradox of materialism). Long-term well-being is better achieved through sustainable consumption with temperance.

Means-end inversion: one originally wants money (means) to improve quality of life (end). However, the process of making money can sacrifice quality of life. Examples: investing all one's money and leaving little for consumption, or choosing a high-paying job with no work-life balance (golden handcuffs).

We already walked too far, down to we had forgotten why embarked.

A man on a thousand mile walk has to forget his goal and say to himself every morning, "Today I'm going to cover twenty-five miles and then rest up and sleep."

- Leo Tolstoy, War and Peace

Self-serving and self-justification

People tend to maintain their ego by self-serving bias:

Overconfidence

People tend to be overconfident about themselves:

- People overestimate the correctness and rationality of their belief.

- Dunning-Kruger effect: people low in capability overestimate their capability, and people high in capability underestimate it. (Low-capability people tend to criticize other people's work even though they cannot do the work themselves.)

- Restraint bias: Overestimating the ability to control emotions, control impulsive behavior and resist addiction.

- False uniqueness: We tend to think that we have special talents and special virtues.

- Hindsight bias: Overconfidence in one's understanding of history and one's ability to predict.

- Bias blind spot: People find it hard to recognize their own biases.

- An expert in one domain tends to think they are generally intelligent in all domains. (Some intellectual experts don't know they are susceptible to psychological manipulation.)

The overconfidence is sometimes useful:

- Being confident helps persuade others, increasing social impact.

- Self-fulfilling prophecy: being confident makes one more eager to do things and better at withstanding failures. Most successes require the confidence to overcome failures along the way.

If there is a risky innovation that has only a 1% success rate, and if everyone is rational and not overconfident, then no one will attempt it. Overconfidence is sometimes beneficial for society.

People are often overconfident about their health. After the doctor tells people to exercise more, reduce screen time and eat less sugar, they tend to stop following the advice after some time, partially because they are overconfident about their health.

Hindsight bias

When looking at the past, people find past events (including Black Swan events) reasonable and predictable, although they didn't predict these events beforehand.

In a complex world, one event can have two contradicting interpretations. For example:

- The Federal Reserve raises interest rates.

  - Bearish: it tightens the money supply.

  - Bullish: it's a sign of a strong economy.

- A company reports great profit.

  - Bearish: that great profit was anticipated and priced in. The potential is being exhausted.

  - Bullish: that company is growing fast.

- A large company buys a startup at a high price.

  - Bearish: the large company is trapped in mismanagement. It cannot compete with the startup despite having more resources.

  - Bullish: the startup's business will synergize with the large company's. It's a strategic move.

People make excuses for their prediction failures, such as:

- Seeing their prediction as "almost" correct. Distorting memory and changing the past prediction.

- Blaming the prediction failure on outside factors, e.g. manipulated statistical data or conspiracies.

- Claiming that they were just unlucky because the Black Swan event was low-probability. (Black Swan events are rare, but you are still likely to encounter multiple Black Swan events in life.)

Another example: When one doesn't know an image is AI-generated, it looks good. But once one knows it's AI-generated, many details are seen as "evidence of AI", even though one didn't notice them before knowing.

Fundamental attribution error

- Attribute one's own success to one's own characteristics (capability, virtue, etc.).

- Attribute one's own failure to external factors (luck, situation, etc.).

- Attribute other people's success to external factors.

- Attribute other people's failure to their characteristics.

Self justification

People tend to justify their previous behaviors, even if these behaviors were chosen randomly, or chosen under external factors that no longer exist.

Self-justification shows self-control and consistency, facilitating social collaboration.

This is related to Stockholm Syndrome. After experiencing pain in the past, people tend to justify their previous pain.

Ben Franklin effect: People like someone more after doing a favor for them.

Endowment effect: We place more value on the things that we own (including ideas). Investors tend to be biased toward positive information about the stocks they own. Disagreeing with an idea tends to be treated as an insult.

Foot-in-the-door effect: One who has agreed to a small request tends to subsequently agree to a larger request.

Saying becomes believing.

Self-handicapping

People want to show an image of high capability (to both others and self). But a failure can debunk the high-capability-image. Self-handicapping is one way of protecting the image. It's an extension of protective pessimism.

| | Try hard | Self-handicap |
|---|---|---|
| Get good result | Shows a sign of common capability. | Shows a sign of great capability. |
| Get bad result | Shows a sign of low capability. | Can blame failure on self-handicapping. |

Examples of self-handicapping:

- Playing videogames instead of studying before an exam.

- Procrastination, which reduces the time available to finish the task.

- Refusing help. Refusing medical treatment.

- Drinking alcohol and using drugs.

- Choosing difficult conditions and methods.

When one succeeds despite self-handicapping, it shows great capability. But if one fails, self-handicapping can only protect one's self-image, not one's image in the eyes of others. People usually judge by the result alone and see failed self-handicapping as low capability.

Setting unrealistically high goals is sometimes a form of self-handicapping. But not always.

Self-handicapping is also a way of reducing responsibility. This is common in large corporations and governments: intentionally creating reasons for failure to reduce responsibility.

Reverse psychology

People tend to fight the things that oppose their desire. Examples:

- Being forbidden to play videogames makes videogames more fun to play.

- Being forced to learn makes one dislike learning.

- People tend to gain more interest in information that is banned by the government.

- When parents object to a romance, the love strengthens.

- Restricting the buying of something makes people buy it more eagerly. The same goes for restricting selling.

Overjustification effect: Providing an external reward reduces internal motivation. Training a child to clean their room by giving a money reward will backfire.

Being helped doesn't always elicit gratitude. The one being helped may feel inferior in social status, so helping may cause hatred, especially when the help cannot be reciprocated.

Ironic process theory: Trying to suppress a thought can backfire. In "Don't think about elephant", the sentence literally contains "elephant", so it will provoke thoughts about "elephant". Actively suppressing a thought will fail. But trying to use other things to distract oneself from a thought is also suppression, so it will also fail. Ironically, after one accepts the thought and stops trying to kill it, the thought can become boring and weaken.

People love to nitpick others' work. There is a trick: before presenting a solution to a client, add obvious minor flaws to the solution. The client will point them out and be more satisfied. (The queen's duck)

Avoid thinking about death

People tend to avoid thinking about inevitable death because it's unpleasant. People may subconsciously feel like they live forever, then:

- People feel like they have plenty of time to procrastinate

- People tend to not value the present because "life is permanent"

- People focus too much on small problems

Stoicism proposes thinking about death all the time (memento mori). Thinking about death can keep one from procrastinating on important things, make one value the present and reduce worry about small problems. But Stoicism does NOT propose indulgence and overdrafting the future.

Belief stability

- People tend to keep their belief stable (being stubborn).

- People tend to avoid conflicting beliefs (cognitive dissonance).

- People tend to justify their previous behavior. Behavior can shape attitudes.

- People have a tendency to persuade others of their beliefs (meme spread).

Confirmation bias: People tend to seek and accept evidence that confirms their beliefs, and are reluctant to accept contradictory evidence.

Confirmation bias may make one pay attention to the wrong thing: attending to the unimportant while ignoring the significant. It can "manipulate" perception.

Motivated reasoning: when people do not want to accept contradictory evidence, they may make up and believe non-falsifiable explanations that account for the evidence in a way consistent with the original belief.

Examples of non-falsifiable explanations:

- "There is [a secret evil group] that controls everything. You don't see evidence of its existence because it's so powerful that it hides all evidences."

- "The AI doesn't work on your task just because you prompted it wrongly." (without telling how to "prompt correctly".)

- "You defend yourself so hard because you know you are guilty." (Kafka trap)

- "Absolute free-market capitalism is the only correct path. All problems of market are caused by the market being not free enough." ("free enough" is a very high standard that can never be reached)

With confirmation bias, more information increases confidence, but doesn't lead to better understanding.

If you don't have an opinion, resist the pressure to have one.

- N. N. Taleb, Link

Information cocoon (echo chamber): People tend to actively choose to consume the information sources that they like, and make friends with those who have similar beliefs.

Another thing I think should be avoided is extremely intense ideology, because it cabbages up one’s mind. ...

I have what I call an iron prescription that helps me keep sane when I naturally drift toward preferring one ideology over another. And that is I say “I’m not entitled to have an opinion on this subject unless I can state the arguments against my position better than the people do who are supporting it. I think that only when I reach that stage am I qualified to speak.”

- Charlie Munger

Belief bias: if a conclusion confirms people's existing beliefs, they tend to believe it regardless of whether the reasoning is correct, and vice versa.

Bullshit asymmetry principle: Refuting misinformation is much harder than producing it. With AI, it's easy to generate seemingly plausible bullshit. To check or refute a piece of misinformation, you need to find sound evidence. This is also a reversal of the burden of proof.

The good side of stubbornness is that it maintains diversity of ideas in a society, helping innovation and the overcoming of unknown risks.

Group justification and system justification

People tend to justify the groups they belong to (group justification), and justify the society that they are in (system justification).

Examples:

- An environmental activist may justify other environmental activists' illegal behaviors, because they are deemed in the same group.

- A middle-class person tends to believe "the poor are lazy" and "the wealthy work harder".

Urge to persuade others

People love to correct others and persuade others. Some ideas are memes that drive people to spread the idea. Correcting others also provides the satisfaction of superiority.

However, due to belief stability, it's hard to persuade or teach others. People dislike being persuaded or taught. This effect is common on social media.

The trouble with having an open mind, of course, is that people will insist on coming along and trying to put things in it.

- Terry Pratchett

Cunningham's Law: The best way to get the right answer on the internet is not to ask a question; it's to post the wrong answer.

People often try hard to show they are smart. But pretending to be stupid (being humble) is sometimes useful:

- Can easily correct mistakes. No need to waste effort justifying mistakes.

- Letting others teach you can make them like you more.

- Decrease others' expectations of you. They will be more surprised when you deliver good results.

- Reduce unnecessary competition.

Sunk cost fallacy

Commitment can be a good thing. A lot of goals require sustained time, effort and resources to achieve.

However, there are investments that turn out to be bad and should be given up to avoid further loss. All the previous investments become sunk cost. People are reluctant to give up because they have already invested a lot in them. Cutting losses signals failure. We want to have hope rather than accept failure.

Examples:

- Continuing to watch a bad movie because you paid for it and have already spent time watching it.

- Keeping an unfulfilling relationship because of the past commitments.

Opportunity cost: if you allocate resource (time, money) to one thing, that resource cannot be used in other things that may be better. Opportunity cost is not obvious.

The difference between "good persistence" and "bad obstinacy":

- Persistent people keep their original root goal. They are happy to make corrections on exact methods for achieving the root goal. They can accept failure of sub-goals.

- Obstinate people keep both the root goal and the exact method to achieve the goal. They see a suggestion to change the exact method as an offense to their self-esteem.

The persistent are like boats whose engines can't be throttled back. The obstinate are like boats whose rudders can't be turned. ...

The persistent are much more attached to points high in the decision tree than to minor ones lower down, while the obstinate spray "don't give up" indiscriminately over the whole tree.

- Paul Graham, The Right Kind of Stubborn

An environment that doesn't tolerate failure makes people avoid correcting mistakes and stay obstinate on the wrong path (especially in authoritarian environments, where loyalty and execution attitude override honesty).

When you’re in the midst of building a product, you will often randomly stumble across an insight that completely invalidates your original thesis. In many cases, there will be no solution. And now you’re forced to pivot or start over completely.

If you’ve only worked at a big company, you will be instinctually compelled to keep going because of how pivoting would reflect on stakeholders. This behavior is essentially ingrained in your subconscious - from years of constantly worrying about how things could jeopardize your performance review, and effectively your compensation.

This is why so many dud products at BigCos will survive with anemic adoption.

Instead, it’s important to build an almost academic culture of intellectual honesty - so that being wrong is met with a quick (and stoic) acceptance by everyone.

There is nothing worse than a team that continues to chase a mirage.

- Nikita Bier, Link

Drip pricing: Only show extra charges (e.g. service fee) when the customer has already decided to buy. A customer who has already spent effort deciding tends to keep the decision.

Ostrich effect

Ignoring negative information or warning signs to avoid psychological discomfort.

Examples:

- Not wanting to get a health problem diagnosed.

- Being reluctant to check the account after an investment has failed.

Self-deception

Robert Trivers proposes that we deceive ourselves to better deceive others:

- If one tries to deceive others without internally believing the lie, the brain needs to process two pieces of conflicting information, which takes more effort and is slower.

- When one knows one is telling a lie, one may be unable to control the nervousness, which can show in heart rate, blushing, body movement, etc. Deceiving oneself before deceiving others avoids these nervousness signals.

Saying becomes believing. Telling a lie too many times may make one truly believe in it.

Quick simplified understanding

We can learn from the world in an information-efficient way: learning quickly from very little information. 2

The flip side of information-efficient learning is hasty generalization. We tend to generalize quickly from very few examples, rather than using logical reasoning and statistical evidence, and are thus easily fooled by randomness.

Reality is complex, so we need to simplify things to make them easier to understand and remember. However, the simplification can go wrong. There is too much information. We have some heuristics for filtering information.

To simplify, we tend to make up reasons for why things happen. A thing with a reason is simpler and easier to memorize than raw complex facts. This process is also compression. 3

Hasty generalization

Examples:

- Seeing a few rude people in one city, then concluding that "people from that city are rude".

- People who have only lived in one country think that some societal issue is specific to the country that they are in. In fact, most societal issues apply to most countries.

- Illusion of control: A gambler may have the illusion that their behavior can control the random outcomes after seeing occasional coincidences.

People tend to see false patterns in random things. This effect is called apophenia.

Related: most people cannot actually behave randomly even if they try to be random. An example: Aaronson Oracle.

Frequency matching

Suppose there are two lights: on each trial the first flashes with 70% probability and the second with 30% probability. When asked to predict which light flashes next, people tend to look for patterns even though the flashes are purely random, and achieve a correct rate of about 58%.

People tend to do frequency matching: their predictions also consist of about 70% first light and 30% second light.

But in that lab experiment environment, the flashes are purely random and the probability stays the same, so the optimal strategy is to stop looking for patterns and always choose the first light, which has the larger probability, giving a correct rate of 70%.

Reference: The Left Hemisphere’s Role in Hypothesis Formation
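The 58% and 70% figures above can be derived directly, assuming each guess is made independently of the actual flash. A frequency matcher is right when the guess and the flash coincide, which happens with probability 0.7 × 0.7 + 0.3 × 0.3. The function names below are illustrative.

```python
def matching_accuracy(p: float) -> float:
    """Hit rate when guesses follow the same frequencies as the flashes.

    Assumes guesses are independent of the actual flashes: the guess is
    right when both pick light 1 (p * p) or both pick light 2.
    """
    return p * p + (1 - p) * (1 - p)


def maximizing_accuracy(p: float) -> float:
    """Hit rate when always guessing the more likely light."""
    return max(p, 1 - p)


p_first_light = 0.7
print(f"frequency matching: {matching_accuracy(p_first_light):.0%}")
print(f"always most likely: {maximizing_accuracy(p_first_light):.0%}")
```

The gap disappears only at `p = 0.5` or `p = 1`; everywhere in between, matching is strictly worse than always choosing the more likely light.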

Although the strategy of always choosing the highest-probability choice is optimal in that lab experiment environment, it's not a good strategy in the complex changing real world:

- Making different choices can increase exploration and help discover new things. Only ever making one choice reduces exploration.

- In the real world, the distribution may change and the highest-probability choice may change. Always making the same choice can be risky, especially when an opponent can learn your behavior.

- In the real world, many things have patterns, so pattern-seeking may be useful.

- In the real world, the "good" is often multi-dimensional. Over-optimizing for one aspect often hurts other aspects. Not choosing the seemingly optimal choice may have hidden benefits.

Confusing correlation with causation

When statistical analysis shows that A correlates with B, the possible causes are:

- A caused B.

- B caused A.

- Another factor, C, caused A and B. (confounding variable)

- Self-reinforcement feedback loop. A reinforces B. B reinforces A. Initial random divergence gets amplified.

- A selection mechanism that favors the combination of A and B (survivorship bias).

- More complex interactions.

- The sampling or analysis is biased.

Examples where the correlation of A and B is actually driven by another factor C:

- Children who wear larger shoes have better reading skills: both are driven by age. Just wearing a larger shoe won't make a kid smarter.

- Countries with more TVs had longer life expectancy: both are driven by economic conditions. Just buying a TV won't make you live longer.

- Ice cream sales increase at the same time as drowning incidents: both are driven by summer.
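The shoe-size example can be reproduced with synthetic data. In the sketch below, age drives both shoe size and reading score, and the two have no direct link. All coefficients, noise levels and function names are made-up assumptions for illustration, not real measurements.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def residuals(ys, xs):
    """What is left of ys after removing its best straight-line fit on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return [y - my - slope * (x - mx) for x, y in zip(xs, ys)]


def simulate(n=5000, seed=0):
    """Return (raw correlation, correlation after controlling for age)."""
    rng = random.Random(seed)
    ages = [rng.uniform(5, 12) for _ in range(n)]
    # Hypothetical relationships: both depend on age plus independent noise.
    shoe = [20 + 1.5 * a + rng.gauss(0, 1) for a in ages]
    reading = [10 * a + rng.gauss(0, 10) for a in ages]
    raw = pearson(shoe, reading)
    adjusted = pearson(residuals(shoe, ages), residuals(reading, ages))
    return raw, adjusted


raw, adjusted = simulate()
print(f"shoe size vs reading score:        {raw:.2f}")
print(f"same, after controlling for age:   {adjusted:.2f}")
```

The raw correlation is strong, but once the part explained by age is removed from both variables, almost nothing is left. Controlling for a confounder like this only works when the confounder is known and measured.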

Among my favorite examples of misunderstood fitness markers is a friend of a friend who had heard that grip strength was correlated with health. He bought one of this grip squeeze things, and went crazy with it, eventually developing tendonitis.

- Paul Kedrosky, Link

Narrative fallacy

Narrative fallacy is introduced in The Black Swan:

We like stories, we like to summarize, and we like to simplify, i.e., to reduce the dimension of matters.

......

The fallacy is associated with our vulnerability to overinterpretation and our predilection for compact stories over raw truths. It severely distorts our mental representation of the world; it is particularly acute when it comes to the rare events.

- The Black Swan

Narrative fallacy includes:

- People tend to make the known facts reasonable, by finding reasons or making up reasons. This can be seen as an information compression mechanism (reasonable facts are easier to remember).

- People prefer simpler understanding of the world. This is also information compression. This includes causal simplification, binary thinking.

- People tend to believe in concrete things and stories rather than abstract statistics. This is related to the anecdotal fallacy.

Nominal fallacy

Nominal fallacy: Understanding a thing just by its name. Examples:

- Knowing that an LLM has "temperature" and concluding that an LLM is a heat-based algorithm.

- Knowing that an LLM has "tokens" and concluding that an LLM is a Web3 crypto thing.

- Thinking that "chip packaging" just means putting a chip into a package. It's actually a complex process.

Outcome bias

People like to judge a decision by its immediate result. However, the real world is full of randomness. A good plan may yield a bad result and a bad plan may yield a good result. And the short-term result can differ from the long-term result.

There is no perfect strategy that will guarantee success. Overemphasizing short-term outcomes leads to abandoning good strategies prematurely.

Delayed feedback issue and learning

The quicker the feedback arrives, the quicker people can learn (this also applies to reinforcement learning AI). But if the feedback is delayed by 6 months, it's hard to learn from it, and before the real feedback comes people may make hasty generalizations from random coincidences, and thus get fooled by randomness.

When feedback comes early, its correlation with the preceding behavior is high, giving a high signal-to-noise ratio. If feedback comes late, many earlier behaviors may correlate with it, so the feedback has a low signal-to-noise ratio, and there are fewer feedback signals.

Reducing cost by removing safety measures usually does not cause any visible accidents in the short run, but the benefit of reduced cost is immediately visible. The accident caused by the removed safety measures may happen years later.

People crave quick feedback. Successful video games and gambling mechanisms utilize this by providing immediate responses to actions.

What's more, for most people, concrete visual and audio feedback is more appealing than abstract feedback (feedback of working with words and math symbols).

The previously mentioned reverse psychology is also related to learning. Being forced to learn something makes one dislike learning it. Self-directed learning lets one focus on what one is interested in, and is thus more effective.

To summarize, most people naturally prefer the learning that:

- Has quick feedback.

- Has concrete visual and audio feedback, instead of abstract feedback.

- Is self-directed rather than forced.

It's also hard to learn when the effects of a decision fall on other people, especially for decision-makers:

It is so easy to be wrong - and to persist in being wrong - when the costs of being wrong are paid by others.

- Thomas Sowell

Causal simplification

People tend to simplify causal relationships and ignore complex nuance. If X is one factor that causes Y, people tend to treat X as the only cause of Y, oversimplifying the causal relationship.

Usually, the superficial effect is seen as the reason, instead of the underlying root cause.

Examples of causal oversimplification:

- Oversimplification: "Poor people are poor because they are lazy."

  Other related factors: Education access, systemic discrimination, job market conditions, the Matthew effect, etc.

- Oversimplification: "Immigrants caused unemployment."

  Other related factors: Manufacturing relocation, automation technologies, economic cycles, education, etc.

- Oversimplification: "The Great Depression happened because of the stock market crash of 1929."

  Other related factors: Immature financial regulation, debt accumulation, production overcapacity, reduced demand caused by wealth inequality, international trade imbalances, etc.

- Oversimplification: "That company succeeded because of the CEO."

  Other related factors: Employee contributions, impact of previous CEOs, luck, etc.

For every complex problem there is an answer that is clear, simple, and wrong.

- H. L. Mencken

People often dream of a "silver bullet" that simply magically works:

- People hope that a "secret advanced weapon" can reverse a systematic disadvantage in war. This almost never happens in the real world.

- Hoping that a secret recipe or a secret technology alone can bring success.

  - Coca-Cola succeeds not just because of the "secret recipe". The branding, global production system and logistics network are also important.

  - Modern technologies are complex and have many dependencies. You cannot simply copy "one key technology" and get the same result. Even just imitating an existing technology often requires a whole infrastructure, many talented people and years of work.

The good and fundamental ideas are often simple. But not all simple ideas are good or fundamental.

Also, revolutionary ideas seem outlandish at first. But not all outlandish ideas are revolutionary.

Also, if an idea is vague enough, it can be applied to almost everything. These vague ideas look fundamental and provide mental satisfaction, but are not useful in actual practice.

Binary thinking

We tend to simplify things. One way of simplification is to ignore the grey-zone and complex nuance, reducing things into two simple extremes.

Examples of binary thinking:

- "That person is a good person." / "That person is a bad person."

- "You're either with us or against us.", "Anything less than absolute loyalty is absolute disloyalty."

- "Bitcoin is the future." / "Bitcoin is a scam".

- "This asset is completely safe." / "This bubble is going to collapse tomorrow."

- FOMO (fear of missing out) / risk averse.

- "No one understands it better than me." / "I don't understand even a tiny bit of it."

- "It's very easy to do" / "It's impossible."

- The idol maintains a perfect image. / The image collapses and the true nature is exposed.

- "We will win quickly." / "We will lose quickly."

- "I can do it perfectly." / "I cannot do it perfectly so I will fail."

- "[X] is the best thing and everyone should use it." / "[X] has this drawback so it's not only useless but also harmful."

- "The market is always fully efficient." / "The market is never efficient."

- Refusing to admit that tradeoffs exist.

People's evaluations are anchored on the expectation, and not meeting an expectation could make people's belief turn to another extreme.

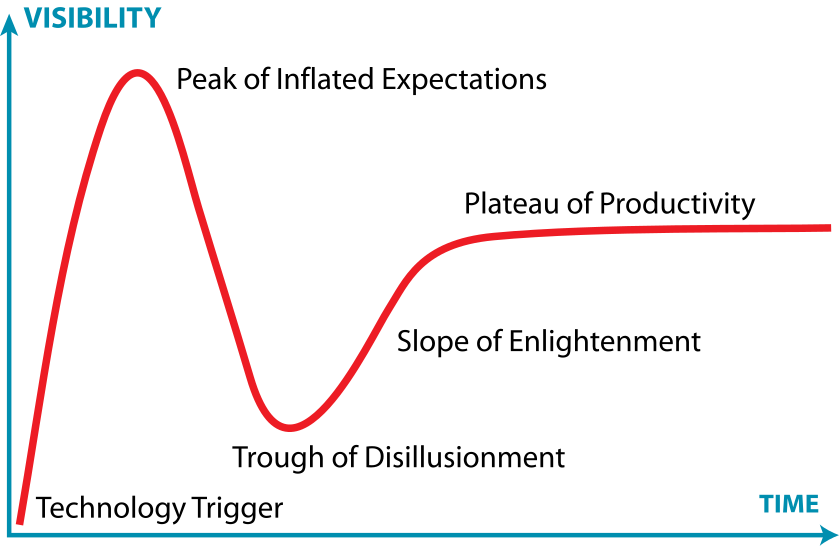

By 2005 or so, it will become clear that the Internet's impact on the economy has been no greater than the fax machine's.

- Nobel Prize-winning economist, Paul Krugman, in 1998

The Internet has indeed changed the world, but the dot-com bubble still burst. The power of the Internet needed time to unfold, and people placed too much expectation on it too early.

Neglect of probability: either neglect a risk entirely or overreact to the risk.

The absolute hardest thing to convince people of is that the optimal amount of fraud in a system is not zero. Obviously it would be ideal if there were no fraud, but at some point the cost of catching it outweighs the benefits.

- Megan McArdle, Link

Between two opposing groups, someone who proposes a middle ground will often be seen as an enemy by both sides.

We often underestimate the time and effort required to do something (due to the Dunning-Kruger effect etc.). When that thing cannot be done within the estimated time and effort, binary thinking may make us overestimate the difficulty and give up.

Strawman argument is a technique in debating: refute a changed version of opponent's idea. It often utilizes binary thinking: refute a more extreme version of opponent's idea (also: slippery slope fallacy). Examples:

- A: "We should increase investment in renewable energy." B: "You want to ban oil, gas, and coal, eliminating millions of jobs and crashing the economy?"

- A: "The history curriculum should include more perspectives to present a more objective and nuanced view of our nation." B: "So you want to rewrite history to make our children hate their own country?"

- A: "We should implement stricter gun control." B: "It's useless, because no matter how strict it is, criminals will always find a way to get guns illegally." (perfect solution fallacy)

Halo effect and horn effect

Halo effect: Liking one aspect of a thing causes one to like all aspects of that thing and its related things.

Examples:

- A person falling in love thinks the partner is flawless.

- Thinking that a beautiful/handsome person is more intelligent and kind.

- A person that likes one Apple product thinks that all designs of all Apple products are correct and superior.

- When one likes one opinion of a political candidate, one tends to ignore the candidate's shortcomings.

Horn effect is the inverse of halo effect: if people dislike one aspect of a thing, they tend to dislike all aspects of that thing and its related things. People tend to judge words by the political stance of the person who said them.

Disagreement on ideas tends to turn into insults against people.

Halo effect and horn effect are related to binary thinking.

Need for closure

People prefer a definite answer over ambiguity or uncertainty (such as "I don't know", "it depends on the exact case", "more investigation is needed"), even if the answer is inaccurate or made up.

This is related to narrative fallacy: people like to make up reasons explaining why things happen.

One day in December 2003, when Saddam Hussein was captured, Bloomberg News flashed the following headline at 13:01: U.S. TREASURIES RISE; HUSSEIN CAPTURE MAY NOT CURB TERRORISM. ......

As these U.S. Treasury bonds fell in price (they fluctuate all day long, so there was nothing special about that) ...... they issued the next bulletin: U.S. TREASURIES FALL; HUSSEIN CAPTURE BOOSTS ALLURE OF RISKY ASSETS.

- The Black Swan

People dislike an uncertain future and keep predicting the future, while ignoring their terrible past prediction record.

People like to wrongly apply a theory to the real world, because applying the theory gives results. Example: assuming that an unknown distribution is Gaussian even when it's not.

Zeigarnik effect: People focus on uncompleted things more than completed things. When some desire is not fulfilled (gambling without winning, losing in a PvP game, browsing social media without seeing the wanted content, etc.), the desire becomes more significant. This effect can make one unwilling to go to sleep.

Need for closure is also related to curiosity.

Idealization of the unfamiliar

People may idealize the things that they are not familiar with:

- People may idealize their partner, until living with the partner for some time.

- "The grass is greener on the other side" (Greener grass syndrome).

- Assuming that another career/lifestyle/country (that you are not familiar with) is better than the current one.

People tend to idealize the distant past and forget the past misery. This helps people get out of trauma, and at the same time idealize the past things:

- Long after giving birth, women tend to forget the pain of childbirth and may want another child.

- Decades after the collapse of the Soviet Union, some people remember more of the good aspects of the Soviet Union.

Illusion of understanding

People may think that they deeply understand something, until writing it down. When writing it down, the "gaps" of the idea will be revealed.

Pure thinking is usually vague and incomplete, but people overestimate the rationality of their pure thinking.

The reason I've spent so long establishing this rather obvious point [that writing helps you refine your thinking] is that it leads to another that many people will find shocking. If writing down your ideas always makes them more precise and more complete, then no one who hasn't written about a topic has fully formed ideas about it. And someone who never writes has no fully formed ideas about anything nontrivial.

It feels to them as if they do, especially if they're not in the habit of critically examining their own thinking. Ideas can feel complete. It's only when you try to put them into words that you discover they're not. So if you never subject your ideas to that test, you'll not only never have fully formed ideas, but also never realize it.

- Paul Graham, Link

Even so, writing the idea down may still not be enough, because natural language is vague, and vagueness can hide practical details. The issues hidden by the vagueness of language will be revealed in real practice.

Having ideas is easy and cheap. If you search the internet carefully you are likely to find ideas similar to yours. The important part is to validate and execute the idea.

People fall in love with ideas because ideas never fight back. Execution does. It exposes your blind spots, your patience, your habits and your excuses. Most founders learn more from the first week of doing than the first year of imagining.

- Hiten Shah, Link

Dunning-Kruger effect also applies to idea generation. An inexperienced person tends to think that their ideas are all good, while an experienced person sees that most ideas fail. Incompetent leaders often criticize experienced workers for not being "creative" enough.

About analogy: Analogies are useful for explaining things to others, but not good for accurate thinking. An analogy makes one ignore the nuanced differences between the analog and the real thing.

Predictive processing

According to predictive processing theory, the brain predicts (hallucinates) most of perception (what you see, hear, touch, etc.). The sensory signals just correct that prediction (hallucination).

Body transfer illusion (fake hand experiment)

Free energy principle: The brain tries to minimize free energy.

Free energy = Surprise + Change of Belief

- Surprise is the difference between perception and prediction.

- Change of Belief is how much belief changes to improve prediction.

The ways of reducing free energy:

- Passive: Change the belief (understanding of the world).

- Active: Use action (change environment, move to another environment, etc.) to make the perception better match prediction. 4

Survivorship bias

Survivorship bias means considering only the "survived", observed samples and not the "silent", "dead", unobserved samples, thereby neglecting the mechanism that selected the samples.

A popular image of survivorship bias:

Other examples of survivorship bias:

- Most gamblers are initially lucky, because the unlucky ones tend to quit gambling early.

- Assume that many fund managers randomly pick stocks. After one year, some lucky ones have good performance and get noticed, while the others are overlooked. In the short term, you cannot know whether success comes from just luck.

- "Taleb's rat health club": Feeding poison to rats increases average health, because the unhealthy ones are more likely to die from poison.

- Social media has more negative news than positive news. Bad news travels fast.

- Successful research results are published while failed attempts stay hidden (publication bias, which is related to p-hacking).

- Only special and interesting events appear on news. The more representative common but not newsworthy events are overlooked.
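The fund manager example above can be sketched as a small simulation. This is only an illustration under stated assumptions: the manager count, the number of years, and the 50% yearly chance of beating the market are all hypothetical, and every manager has zero skill by construction.

```python
import random

def simulate_fund_managers(n_managers=1000, n_years=5, seed=0):
    """Count how many skill-less managers keep an unbroken winning record.

    Assumption: each manager beats the market in a given year with
    probability 0.5, purely by luck. Managers who lose once drop out
    of sight (they become the "silent" samples).
    """
    rng = random.Random(seed)
    survivors = n_managers
    history = [survivors]
    for _ in range(n_years):
        # Only the managers who got lucky this year remain visible.
        survivors = sum(1 for _ in range(survivors) if rng.random() < 0.5)
        history.append(survivors)
    return history

history = simulate_fund_managers()
print(history)  # visible managers after 0, 1, ..., 5 years
```

With these assumptions, roughly 1 in 32 managers ends up with a perfect five-year record. An observer who only sees those survivors may credit skill, although the simulation contains none.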

A more generalized version of survivorship bias is selection bias: When the sampling is not uniform and involves a selection mechanism (not necessarily a 100% accurate selection), the result will be biased.

The opinions on social media do not necessarily represent most people's views. There are several selection mechanisms at work: 1. not all people use the same social media platform 2. the people using social media may not post opinions 3. not all posted opinions will be seen by you, due to algorithmic recommendation.

Some physicists propose the Anthropic Principle: the physical laws allow life because the existence of life "selects" the physical laws. The special nature of the physical laws comes from survivorship bias.

What people don't do is as important as what people do. Negative advice (what not to do) is as important as positive advice (what to do). The experiences of those who failed are also important, not just the experiences of those who succeeded.

Availability bias

Availability bias: When thinking, the examples that immediately come to mind play a big role.

Example: If you recently saw a car crash, you tend to think that traveling by car is riskier than traveling by plane. However, if you recently watched a movie about a plane crash, you might feel that planes are more dangerous.

Nothing in life is as important as you think it is when you are thinking about it.

- Daniel Kahneman

Vividness bias: People tend to believe vivid things and stories more than abstract statistical evidence. This is related to anecdotal fallacy and narrative fallacy.

The Italian Toddler: In the late 1970s, a toddler fell into a well in Italy. The rescue team could not pull him out of the hole and the child stayed at the bottom of the well, helplessly crying. ...... the whole of Italy was concerned with his fate ...... The child's cries produced acute pains of guilt in the powerless rescuers and reporters. His picture was prominently displayed on magazines and newspapers ......

Meanwhile, the civil war was raging in Lebanon ...... Five miles away, people were dying from the war, citizens were threatened with car bombs, but the fate of the Italian child ranked high among the interests of the population in the Christian quarter of Beirut.

- The Black Swan

Enforcing safety measures is usually unappreciated, because people only see the visible cost and friction caused by safety measures (concrete), and do not see the consequences of not applying safety measures in a parallel universe (abstract), until an incident really happens (concrete).

People are more likely to pay for terrorism insurance than for plain insurance that covers terrorism and other things.

If people are given some choices, people tend to choose one of the provided choices and ignore the fact that other choices exist. This is also framing effect.

People tend to attribute one product to one public figure, or attribute a company to its CEO, because that's the name that they know, and because of causal simplification tendency.

People often think that the quality of new movies/games/novels is declining, and that they are worse than the ones produced in an earlier "golden age". However, this is mainly because people only remember the good ones and neglect the bad ones that have been filtered out by time.

Interestingly, LLMs also seem to have availability bias: the information mentioned before in context can guide or mislead subsequent output. The knowledge that's "implicit" in LLM may be suppressed by context.

When reviewing a document, most reviewers tend to nitpick on the most easy-to-understand places, like a diagram or the summary, while not reading the subsequent text that explains the nuances.

When judging on other people's decisions, people often just see visible downsides and don't see it's a tradeoff that avoids larger downsides.

Agenda-setting theory: what media pay attention to can influence people's attention, then influence people's opinions.

Saliency bias: We pay attention to the salient things that grab attention. The things that we don't pay attention to are ignored. Attention is a core mechanism of how brain works 5.

"Blind" outside of attention

When people pay attention to one thing, they tend to ignore things that are outside of attention.

Invisible gorilla test: when subjects are asked to count things in a basketball match, they overlook the person wearing a gorilla suit.

Prior belief (confirmation bias) can affect perception. This not only affects recognition of objects, but also affects reading of text. Under confirmation bias, when reading text, one may skip important words subconsciously.

In software UX: if the user is focused on finishing a task, when the software pops up a dialog, the user tends to quickly close the dialog to continue the task, without reading text in dialog. 6

Anecdotal fallacy

People tend to believe stories, anecdotes or individual examples, even if these examples are made up or are just statistical outliers. In contrast, people are less likely to believe abstract statistical evidence.

Examples:

- "Someone smoked their entire life and lived until 97, so smoking is actually not that bad."

- "Someone never went to college and turned out to be successful, so college is a waste of time and money."

- "Someone made a fortune trading cryptocurrency, and so can I."

- "It was the coldest winter on record in my town this year. Global warming can't be real." 7

Familiarity bias

People prefer familiar things. One reason is the availability bias. Another reason is that people self-justify their previous attention and dedication.

When making decisions, people tend to focus on what they already know, and ignore the aspects that they do not know or are not familiar with. We have already considered what we already know, so we should focus on what we don't know in decision making.

This is related to risk compensation: People tend to take more risk in familiar situations.

Imprinting: At a young age, people are more likely to embrace new things. At an older age, people are more likely to prefer familiar things and avoid taking risks with unfamiliar things. (Baby duck syndrome).

- Anything that is in the world when you’re born is normal and ordinary and is just a natural part of the way the world works.

- Anything that's invented between when you’re 15 and 35 is new and exciting and revolutionary and you can probably get a career in it.

- Anything invented after you're 35 is against the natural order of things.

- Douglas Adams

Frequency illusion

Noticing something more frequently after learning about it, leading to overestimating its prevalence or importance.

Sometimes, one talks about something and then sees an ad for it on social media, and thus suspects that the phone and the social media app are recording voice for ad recommendation. Of course that possibility exists, but the perception of that possibility is exaggerated by frequency illusion.

Representativeness bias

People tend to judge things by comparing them with examples (stereotypes) that come to mind, and tend to think that one sample is representative of the whole group.

Representativeness bias can sometimes be misleading:

Say you had the choice between two surgeons of similar rank in the same department in some hospital. The first is highly refined in appearance; he wears silver-rimmed glasses, has a thin build, delicate hands, measured speech, and elegant gestures. ...

The second one looks like a butcher; he is overweight, with large hands, uncouth speech, and an unkempt appearance. His shirt is dangling from the back. ...

Now if I had to pick, I would overcome my sucker-proneness and take the butcher any minute. Even more: I would seek the butcher as a third option if my choice was between two doctors who looked like doctors. Why? Simply the one who doesn’t look the part, conditional on having made a (sort of) successful career in his profession, had to have much to overcome in terms of perception. And if we are lucky enough to have people who do not look the part, it is thanks to the presence of some skin in the game, the contact with reality that filters out incompetence, as reality is blind to looks.

- Skin in the game

Note that the above quote should NOT be simplified into "the unprofessional-looking ones are always better". It depends on the exact case.

Gambler's fallacy

When an event has occurred frequently, people tend to believe that it will occur less frequently in the future.

Examples:

- When tossing a coin, if heads has appeared frequently, people tend to think tails will appear more frequently next. (If the coin is fair and tosses are statistically independent, this is false. If the coin is biased, it's also false.)

- When a stock goes down for a long time, people tend to think it will be more likely to rise.
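For the coin example, a minimal simulation sketch can check whether a streak changes the next toss. The toss count, streak length and seed are arbitrary assumptions, and the coin is assumed fair and independent.

```python
import random

def heads_rate_after_streak(n_tosses=200_000, streak=3, seed=1):
    """Frequency of heads right after `streak` consecutive heads.

    Assumption: a fair coin with independent tosses.
    """
    rng = random.Random(seed)
    tosses = [rng.random() < 0.5 for _ in range(n_tosses)]  # True = heads
    # Collect every toss that directly follows a run of `streak` heads.
    follow_ups = [
        tosses[i]
        for i in range(streak, n_tosses)
        if all(tosses[i - streak:i])
    ]
    return sum(follow_ups) / len(follow_ups)

rate = heads_rate_after_streak()
print(f"heads rate after a streak of heads: {rate:.3f}")
```

Under these assumptions the rate stays close to 0.5: the streak does not make tails "due".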

One related topic is the law of large numbers: if there are enough samples of a random event, the average of the results will converge. The law of large numbers focuses on the overall average, and does not consider the exact order.

The law of large numbers works by diluting unevenness rather than correcting unevenness. For example, a fair coin toss will converge to 1/2 heads and 1/2 tails. Even if the past events contain 90% heads and 10% tails, this does not mean that the future events will contain more tails to "correct" the past unevenness. The large number of future samples will dilute the finite number of uneven past samples, eventually approaching 50% heads.
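The dilution can be made concrete with a small worked calculation. The starting point of 90 heads in 100 tosses is a hypothetical instance of the "90% heads" case above, and the future tosses are assumed to be exactly fair on average.

```python
def overall_heads_fraction(past_heads, past_tosses, future_tosses, p_heads=0.5):
    """Expected overall fraction of heads after adding fair future tosses.

    The future tosses are NOT skewed toward tails; they simply
    contribute p_heads * future_tosses expected heads.
    """
    expected_future_heads = p_heads * future_tosses
    return (past_heads + expected_future_heads) / (past_tosses + future_tosses)

# Hypothetical past: 90 heads in 100 tosses.
for n in (0, 100, 1_000, 100_000):
    print(n, round(overall_heads_fraction(90, 100, n), 4))
# 0 0.9
# 100 0.7
# 1000 0.5364
# 100000 0.5004
```

The surplus of 40 heads never gets "paid back". It just becomes negligible relative to the growing total.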

Actually, the intuition behind gambler's fallacy can be correct in a system with a negative feedback loop, where past samples change the short-term distribution. These long-term feedback loops are common in nature, such as the predator-prey population relation. They also appear in markets with cycles. (Note that in financial markets, some cycles are much longer than expected, forming trends.) In a PvP game with an Elo-score-based matching mechanism, losing makes you more likely to win in the short term.

One related concept is regression to the mean, meaning that, if one sample is significantly higher than average, the next sample is likely to be lower than the last sample, and vice versa. Example: if a student's score follows a normal distribution with average 80, then after scoring 90, that student will likely get a score below 90 in the next exam.
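The exam example can be sketched as a simulation. The average of 80 comes from the example; the standard deviation of 5 and the independence of consecutive exams are assumptions made only for illustration.

```python
import random

def fraction_next_lower(mean=80.0, std=5.0, threshold=90.0,
                        n_exams=200_000, seed=2):
    """Among exams scored at or above `threshold`, how often is the
    following exam lower?

    Assumption: scores are independent draws from Normal(mean, std);
    the score distribution never changes.
    """
    rng = random.Random(seed)
    scores = [rng.gauss(mean, std) for _ in range(n_exams)]
    # Pair each high score with the score of the exam right after it.
    pairs = [(a, b) for a, b in zip(scores, scores[1:]) if a >= threshold]
    return sum(1 for a, b in pairs if b < a) / len(pairs)

frac = fraction_next_lower()
print(f"next exam is lower after a 90+ score: {frac:.1%}")
```

The next score is lower in the vast majority of cases even though nothing about the student changed, which is all that regression to the mean claims.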

The difference between gambler's fallacy and regression to the mean:

- Gambler's fallacy: if the past samples deviate from the mean, assume that the distribution of future samples changes to "compensate" for the deviation. This is wrong when the distribution doesn't change.

- Regression to the mean: if the last sample is far from the mean, the next sample will likely be closer to the mean than the last sample. It compares the next sample with the last sample, not the future mean with the past mean.

Regression fallacy: when regression to the mean happens after doing something, people tend to think that what they did caused the effect (hasty generalization). Example: the kid gets a bad score; the parent criticizes; the kid then gets a better score. It looks as if criticizing improved the score, although this is just regression to the mean, which can happen naturally.

Conjunction fallacy

People tend to think that more specific and reasonable cases are more likely than abstract and general cases.

Consider two scenarios:

- A: "The company will achieve higher-than-expected earnings next quarter."

- B: "The company will launch a successful new product, and will achieve higher-than-expected earnings next quarter."

Although B is more specific than A, and thus has a lower probability than A, people tend to think B is more likely than A. B implies a causal relationship, and thus looks more reasonable.
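Why B can never be more likely than A follows from the product rule, sketched below. The two probability values are made-up numbers used purely for illustration.

```python
def conjunction_probability(p_a, p_b_given_a):
    """P(A and B) = P(A) * P(B | A), which can never exceed P(A)."""
    if not (0.0 <= p_a <= 1.0 and 0.0 <= p_b_given_a <= 1.0):
        raise ValueError("probabilities must be within [0, 1]")
    return p_a * p_b_given_a

# Hypothetical numbers:
p_earnings = 0.40                # scenario A: earnings beat expectations
p_product_given_earnings = 0.50  # a successful launch, given that A happens

# Scenario B = successful launch AND earnings beat expectations.
p_both = conjunction_probability(p_earnings, p_product_given_earnings)
print(p_both)  # 0.2
```

Whatever value the conditional probability takes, multiplying by a number in [0, 1] cannot raise the result above the probability of A.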

People tend to think that a story with more details is more plausible, and treat probability as plausibility. A story with more details is not necessarily more plausible, as the details can be made up.

Making a story more reasonable allows better information compression, thus making it easier to remember and recall.

Curse of knowledge

People often assume that others know what they know. So people often omit important details when explaining things, causing problems in communication and teaching.

When learning a new domain of knowledge, it's beneficial to ask "stupid questions". These "stupid questions" are actually fundamental questions, not stupid at all. But these fundamental questions are seen as stupid by experts, under curse of knowledge.

One benefit of AI is that you can ask "stupid questions" without being humiliated by experts (but be wary of hallucinations).

If a "stupid question" doesn't have a sound answer, then maybe something important is overlooked by everyone.

Simplicity is often confused with familiarity. If one is very familiar with a complex thing, one tends to think that thing is simple.

Curse of knowledge also applies when using AI. If the user assumes the AI knows the details of their work and doesn't give it that information, the AI tends to output generic, useless things. It's recommended to put knowledge of your work (including failed attempts) into a document. This not only reduces your memory pressure but also gives the AI something to read.

Normalcy bias

Normalcy bias: Thinking that past trend will always continue. This is partially due to confirmation bias.

Although the market has trends, and a trend may be much longer than expected, no trend continues forever. Anything that is physically constrained cannot grow forever.

Most people are late-trend-following in investment: not believing in a trend in the beginning, then firmly believing in the trend in its late stage. This is dangerous, because the market has cycles, and some macro-scale cycles can span years or even decades. The experiences gained in the surge part of the cycle are harmful in the decline part of the cycle and vice versa.

First impression effect (primacy effect)

People tend to judge things by first impression. This makes people generate belief by only one observation, which is information-efficient, but can also be biased.

Recency bias

Overemphasizing recent events, while ignoring long-term trends.

People tend to

- overestimate the short-term effect of a recent event, and

- underestimate the long-term effect of an old event.

This is related to Amara's law: we tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.

This is also related to availability bias, where the just-seen events are more obvious and easier to recall than old events and non-obvious underlying trends.

Normalcy bias means underreacting to new events, while recency bias means overreacting to new events, which is the opposite. These two do not actually conflict. Which one takes effect first depends on the actual situation and existing beliefs (confirmation bias). When a person does not believe in a trend but the trend continues for a long time, binary thinking may make that person turn their belief 180 degrees and deeply believe in the trend.

Relation between recency effect and primacy effect:

- One first sees A, then after a long time sees B: the recency effect says that B has more influence than A.

- One first sees A, then sees B, then a long time passes: the primacy effect says that A has more influence than B.

Framing effect

People tend to make decisions based on how information is presented (framed) rather than objective facts.

There are many ways to frame one fact. For example, one from positive aspect, one from negative aspect:

- "90% of people survive this surgery" / "10% of people die from this surgery".

- "99.9% effective against germs" / "Fails to kill 0.1% of germs".

- "You are the hero of your own story" / "No one is coming to help you".

The wording of a thing can affect how people perceive it. Examples:

- "Gun control" / "Gun safety"

- "Government subsidy" / "Using taxpayer money"

- "Risk measurement" / "Risk forecast"

- "Necessary trade-off" / "Sacrifice"

- "Flood of refugees" / "Exodus"

- "Be rejected" / "Dodged a bullet"

The content creator could emphasize one aspect and downplay another aspect, and use different wording or art style to convey different opinions. The people reading the information could be easily influenced by the framing subconsciously.

A loaded question is a question that contains an assumption (framing). Following that assumption can lead to a biased answer. Example: "Do you support the attempt by the US to bring freedom and democracy to other places in the world?"

Current LLMs are mostly trained to satisfy the user. If you ask an LLM a loaded question that carries a bias, the LLM often follows your bias to please you.

Asking the right question requires the right assumption.

Mehrabian's rule: When communicating attitudes and feelings, the impact is 7% verbal (words), 38% vocal (tone of voice), 55% non-verbal (facial expressions, gestures, posture). Note that this doesn't apply to all kinds of communications.

Just looking confident can often make other people believe. This even applies when the talker is AI:

A friend sent me MRI brain scan results and I put it through Claude. No other AI would provide a diagnosis, Claude did. Claude found an aggressive tumour. The radiologist report came back clean. I annoyed the radiologists until they re-checked. They did so with 3 radiologists and their own AI. Came back clean, so looks like Claude was wrong. But looks how convincing Claude sounds! We're still early...

- Link

Anchoring bias: People's judgement may be influenced by reference "anchors", even if the anchor is irrelevant to the decision. Anchoring is a kind of framing. A salesman may first show customers an expensive product and then show cheaper products, so that the later products feel cheaper by comparison.

The Anchoring Bias and its Effect on Judges.

Decoy effect: Adding a new worse option to make another option look relatively better.

Lie by omission: A person can tell a lot of truth while omitting the important facts and stressing unimportant facts (wrong framing), intentionally causing misunderstanding without lying in the literal sense.

Sometimes an example or a diagram can be misleading, due to lie by omission. If there are 2 possible cases, but the diagram only draws the first case, then the viewer may subconsciously ignore the possibility of the second case.

A price chart is usually drawn with the lowest price at the bottom and the highest price at the top. The offset and scale of the chart are also framing. If a stock has already fallen by 30%, the latest price is at the bottom of the chart, so the stock seems cheap when looking at the chart, but it may actually be not cheap at all, and vice versa.

Reversal of burden of proof: One common debating technique is to reverse the burden of proof to opponent: "My claim is true because you cannot prove it is false." "You are guilty because you cannot prove you are innocent."

The PowerPoint (keynote, slide) medium is good for persuading, but bad for communicating information. It encourages the author to omit information instead of writing details. Amazon bans PowerPoint for internal usage. See also: Columbia Space Shuttle Disaster, Military spaghetti powerpoint.

Analogies also utilize framing bias. For example: "National deficit is like a credit card bill" / "National deficit is like a business investment".

Some media often quote out of context (断章取义). Natural language is often vague and its meaning highly depends on context. Removing the context can easily mislead the audience. This also utilizes framing bias.

Two talking styles

Two different talking styles: the charismatic leader style and the intellectual expert style:

| Charismatic leader talking style | Intellectual expert talking style |
|---|---|
| Confident and assertive (doesn't fear being wrong) | Conservative and rigorous (fears being wrong) |
| Persuades using narratives and emotions (more effective for most people) | Persuades using expert knowledge and evidence (less effective for most people) |
| Creates hope and a sense of mission | Warns about tradeoffs and possible risks |
| Often takes risks and bears responsibility. Often makes decisions quickly using intuition and simple logic | Often conservative and hesitant in taking risks and bearing responsibility |

Note that the above are two simplified stereotypes. The real cases may be different.

Related: A good leader should be insistent when sure of being correct, and open-minded when not sure. A bad leader pretends to be nice when knowing for sure that they are wrong, and becomes insecurely aggressive when challenged on things they are not sure about.

Blame the superficial

"Shooting the messenger" means blaming the one who brings the bad news, even though the messenger bears no responsibility for causing it.

The same effect happens in other forms:

- Blaming the journalist who exposes bad things in society.

- Refusing medical treatment, because treatment reminds one of illness and shows weakness.

- In a corporation, the responsibility for solving a problem usually falls on the one who raises the problem, not the one who created it.

Imagine someone who keeps adding sand to a sand pile without any visible consequence, until suddenly the entire pile crumbles. It would be foolish to blame the collapse on the last grain of sand rather than the structure of the pile, but that is what people do consistently, and that is the policy error. ...

As with a crumbling sand pile, it would be foolish to attribute the collapse of a fragile bridge to the last truck that crossed it, and even more foolish to try to predict in advance which truck might bring it down. ...

Obama’s mistake illustrates the illusion of local causal chains - that is, confusing catalysts for causes and assuming that one can know which catalyst will produce which effect.

- The Black Swan of Cairo; How Suppressing Volatility Makes the World Less Predictable and More Dangerous

Scarcity heuristic

People tend to value scarce things even when they are not actually valuable, and to undervalue good things that are abundant.

Examples:

- When an online learning material is always there, people have no pressure to learn and often just bookmark it.

- A thing that's sold in a time-limited or quantity-limited way is deemed valuable.

- Restricting the purchase of something makes people buy it more eagerly, even when they don't need it. Similarly, restricting some information may increase people's perceived value of that information.

People tend to value something only after losing it.

Health is forgotten until it’s the only thing that matters.

- Bryan Johnson, Link

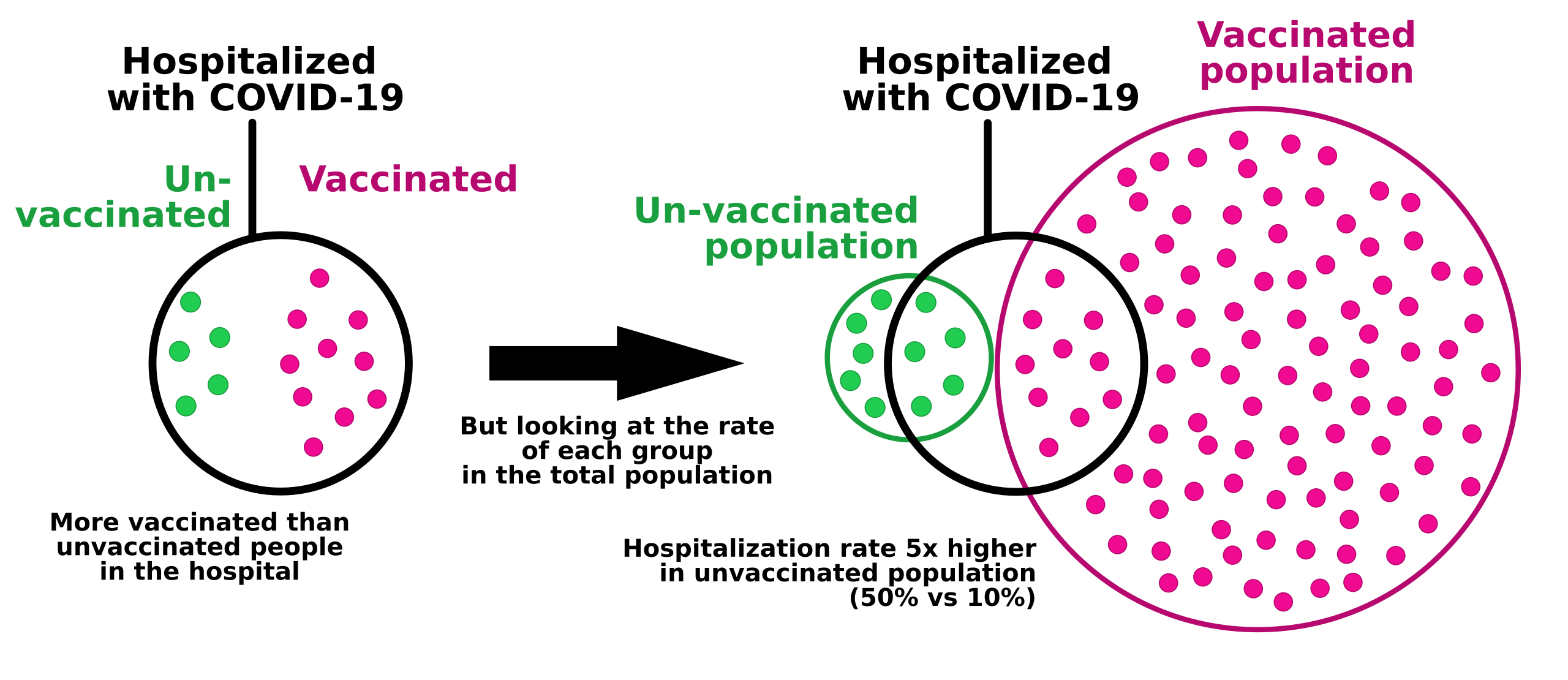

Simpson's paradox and base rate fallacy

The correlation across all samples may contradict the correlation inside each subgroup.

Examples:

- In the COVID-19 pandemic, a developed country can have a higher overall fatality rate than a developing country, even though in each age group the developed country's fatality rate is lower. The reason is that the developed country has a larger share of old people.

- After improving a product, the overall customer satisfaction score may decrease, because the product becomes popular and attracts customers who don't fit the product, even though the original customers' satisfaction score increases.

- You post something on the internet that 90% of people like and 1% of people hate. The people who like the post usually don't direct-message you, but the people who hate it often have strong motivation to do so. So your direct messages may contain more haters than likers, even though most people like your post.
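The COVID-19 example above can be sketched with numbers. All case and death counts below are invented purely for illustration, and the names `data`, `fatality_rate` and `overall_rate` are hypothetical. The sketch only shows how a lower rate in every age group can coexist with a higher overall rate when the age mix differs.

```python
# Hypothetical (cases, deaths) per age group. The numbers are made up for illustration.
data = {
    "developed": {"young": (2000, 2), "old": (8000, 400)},
    "developing": {"young": (8000, 16), "old": (2000, 160)},
}


def fatality_rate(cases, deaths):
    """Fatality rate inside one subgroup."""
    return deaths / cases


def overall_rate(groups):
    """Fatality rate after pooling all subgroups of one country."""
    total_cases = sum(cases for cases, _ in groups.values())
    total_deaths = sum(deaths for _, deaths in groups.values())
    return total_deaths / total_cases


for country, groups in data.items():
    per_group = {age: fatality_rate(*counts) for age, counts in groups.items()}
    print(country, per_group, "overall:", overall_rate(groups))
```

With these made-up counts, the developed country has the lower rate among the young and among the old, yet the higher pooled rate, because most of its cases fall in the old group.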

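Base rate fallacy: there are more vaccinated COVID-19 patients than unvaccinated COVID-19 patients in hospital, but that doesn't mean the vaccine is bad. If most of the population is vaccinated, vaccinated patients can outnumber unvaccinated ones even when the vaccine greatly lowers each person's risk.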
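A minimal arithmetic sketch of the hospital example, assuming a hypothetical population in which 90% are vaccinated and the vaccine cuts the hospitalization risk to one fifth. None of these numbers are real data.

```python
# All numbers are hypothetical, chosen only to illustrate the base rate effect.
population = 1_000_000
vaccinated = 900_000                      # assumed: 90% of people are vaccinated
unvaccinated = population - vaccinated    # 100,000

# Assumed hospitalizations per 10,000 people in each group.
risk_vaccinated = 20
risk_unvaccinated = 100

patients_vaccinated = vaccinated * risk_vaccinated // 10_000        # 1800
patients_unvaccinated = unvaccinated * risk_unvaccinated // 10_000  # 1000

print("vaccinated patients:", patients_vaccinated)
print("unvaccinated patients:", patients_unvaccinated)
```

In this sketch the vaccinated patients outnumber the unvaccinated ones, although each vaccinated person's risk is five times lower. Comparing the rates inside each group, rather than the raw patient counts, removes the illusion.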

In these cases, the confounding variable corresponds to which subgroup the sample is in. Stratified analysis means analyzing each subgroup separately, which controls for the confounding variable.

False consensus (echo chamber, information cocoon)

When a person is in a small group with similar opinions, they tend to think that the general population holds similar opinions. When they encounter someone who disagrees with them, they tend to think the dissenter is in the minority or is defective in some way.

This effect is exacerbated by the algorithmic recommendations of social media.

We also tend to think other people are similar to us in some ways. We learn from very few examples, and those few examples include ourselves.

We don't see things as they are. We see things as we are.

Priming

We use relations to efficiently query information in memory. The brain is good at looking up relations in an automatic, unintentional and subconscious way.

Being exposed to information makes people recognize similar concepts more quickly. Examples:

- Being reminded of "yellow" makes recognizing "banana" faster.

- Being reminded of "dog" makes recognizing "cat" faster.

Being exposed to information also changes behavior and attitudes. Examples:

- Being more likely to interpret things as danger signals after watching a horror movie.

- Red in food packaging increases people's intention to buy it.

- Being familiar with a brand after being exposed to its ads, even when trying to ignore them.

- Sleeper effect: After being exposed to persuasion, people who don't initially agree may gradually come to agree as time passes.

The main moral of priming research is that our thoughts and our behavior are influenced, much more than we know or want, by the environment of the moment.

- Thinking, Fast and Slow

Note that the famous "age priming" effect (walking more slowly after being reminded of aging concepts) failed to replicate.

The placebo effect is also possibly related to priming.

Slot machines have a mechanism called losses disguised as wins (LDWs). When the gambler wins, the machine shows fancy lights and plays sounds, stimulating the gambler. But when the gambler loses slightly, the machine still gives the light and sound stimulus, creating a feeling of winning. The gambler then feels that they win more often than they actually do.

Spontaneous trait transfer: listeners tend to associate what the speaker says with the speaker, even when the speaker is talking about another person:

- If you praise another person, the listeners tend to subconsciously think that you are also good.

- If you say something bad about another person, the listeners tend to subconsciously think you are also bad.

Flattery subconsciously increases favorability, even when the listener knows it's flattery (this even applies to sycophantic AI). Harsh criticism subconsciously reduces favorability, even when the listener knows the criticism is beneficial. A placebo can still work even when the patient knows it's a placebo.

Efficient decision making

When making decisions, humans tend to follow intuition, which is quick and energy-efficient, but also less accurate.

- Quickly making a decision before having complete information is often better than waiting for a complete investigation.

- Sometimes several different decisions can all fulfill the goal. What matters is acting quickly, rather than which decision is optimal.

Thinking, Fast and Slow proposes that the human mind has two systems:

- System 1 thinks by intuition and heuristics, which is fast and efficient, but inaccurate and biased.

- System 2 thinks by rational logical reasoning, which is slower and requires more effort, but is more accurate.

Most thinking mainly uses System 1, without this being noticed.

Emotion overrides rationality

Under intense emotion, rationality (System 2) is overridden, making one more likely to make mistakes.

Some examples:

- When being criticized, the more eager you are to prove yourself correct, the more mistakes you may make.

- A trader experiencing a loss tends to trade more irrationally and lose more money.

Being calm can "increase intelligence".

When someone is in the grip of intense emotion, logical argument often has little persuasive effect, and emotional connection is often more effective.

Default effect

People tend to choose the default and easiest option, partly due to laziness and partly due to fear of unknown risk.

In software product design, the default options play a big role in how users use and feel about the software. Increasing the cost of a behavior greatly reduces the number of people doing it:

- If a piece of functionality has to be enabled manually, far fewer users will know about it and use it.

- A page load time that is just 1 second longer may reduce user conversion by 30%. Source

- Each setup procedure will frustrate a portion of users, making them give up. A good product requires minimal configuration to start working.

Sometimes, if doing something is 10% more difficult, 50% fewer people will do it, and vice versa. The relationship is non-linear.
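One way to see how setup steps compound is a rough funnel sketch. It assumes, hypothetically, that every setup step independently makes the same fraction of the remaining users give up. Real products will not behave this uniformly, and the function name `completion_share` and the 20% drop rate are illustrative assumptions only.

```python
def completion_share(drop_per_step, steps):
    """Share of users who finish all steps, assuming each step
    independently loses `drop_per_step` of the users still remaining."""
    return (1 - drop_per_step) ** steps


# Assumed for illustration: each step makes 20% of the remaining users give up.
for steps in range(6):
    print(steps, "steps ->", round(completion_share(0.2, steps), 3))
```

Under this assumption, three such steps already lose roughly half of the users, which is why trimming even one step from a setup flow can matter.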

Ask for no, don’t ask for yes. When asked to approve something they didn't plan, people tend to refuse or delay approval, because the approver bears responsibility. Proceeding by default and asking only for a "no" keeps more control in your hands, but also means you bear more responsibility.

Software UX design should avoid confronting the user with a decision that must be made. Making a decision consumes mental effort and gives a feeling of risk. The software should have a reasonable default and let the user customize on demand.

Status quo bias: the tendency to maintain the status quo. This is related to risk aversion, as change may bring risk.

A related concept is omission bias: People judge the harm of doing something (commission) as greater than the harm of not doing anything (omission). Doing things actively bears more responsibility. In the trolley problem, not doing anything reduces perceived responsibility.

If there is an option to postpone some work, the work may eventually never be done.

Path dependence: sticking to what worked in the past and avoiding change, even when the paradigm has shifted and the decisions that succeeded in the past are no longer appropriate.

I think people's thinking process is too bound by convention or analogy to prior experiences. It's rare that people try to think of something on a first principles basis.

They'll say, "We'll do that because it's always been done that way." Or they'll not do it because "Well, nobody's ever done that, so it must not be good."

But that's just a ridiculous way to think.

You have to build up the reasoning from the ground up - "From the first principles" is the phrase that's used in physics. You look at the fundamentals and construct your reasoning from that, and then you see if you have a conclusion that works or doesn't work, and it may or may not be different from what people have done in the past.

- Elon Musk

Law of the instrument: "If the only tool you have is a hammer, it is tempting to treat everything as if it were a nail."

We shape our tools, and thereafter our tools shape us.

Action bias

Action bias: In situations where taking action is the norm, people prefer to do something instead of doing nothing, even when the action has no effect or a negative effect.

When being judged by other people, people tend to act in order to show their value and productivity and to give an impression of control:

- A personal doctor may prescribe useless medications to show they are working. (Antifragile argues that useless medications are potentially harmful. It's naïve interventionism.)

- A politician tends to take political action to show that they are working on an issue. These policies usually help the problem superficially but don't address the root cause, and may exacerbate the problem. One example is subsidizing house buyers, which pushes housing prices higher, instead of building more houses.

- Financial analysts tend to give a definitive result even when they know there isn't enough sound evidence.

For high-liquidity assets (e.g. stocks), people tend to trade impulsively when the market is volatile. But for low-liquidity, harder-to-trade assets (e.g. real estate), people tend to hold when the market is volatile.

Action bias does not contradict the default effect. When one is asked to work and show value, taking action is the default behavior, and not acting is riskier, as people tend to question someone who does not look like they are working.

It's not the things you buy and sell that make you money; it's the things you hold.

- Howard Marks

Also, when under pressure, people tend to act in a hurry before thinking, which increases the chance of making mistakes.

Prioritizing the easy and superficial

Law of least effort: people tend to choose the easiest way to do things, taking the path of least resistance. 8